
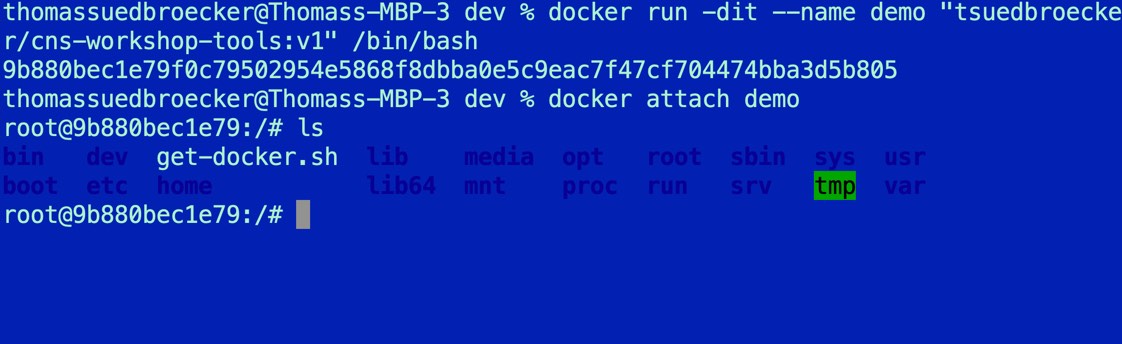

I'm going to let you in on a DevOps secret here: the thing all DevOpsy people love to do is build a super fancy and complex system, then find a way to deal with it like a regular shell. Or connect to it with SSH and then treat it like a regular shell.

Docker is no different! You are running a computer inside some other computer. Maybe that computer is an EC2 instance or a laptop. You can even get really crazy and run a VM that is then running Docker.

Most of the time when I use Docker I am using it to package and distribute an application. Sometimes I'm using it for something cooler like a distributed computing project. But a lot of times I'm throwing a Dockerfile in a GitHub repo so that I don't have to install CLIs that I just know will eventually conflict on my laptop.

Long story short, you can tell Docker to run the command bash, which drops you into a shell: `docker run -it name-of-image bash`. For example: `docker run -it continuumio/miniconda3:latest bash`. But keep reading for more.

Google a Docker image for your favorite programming language. For me this is Python, and specifically I like conda. From the host, run `docker run -it continuumio/miniconda3:latest bash`. Then run a few commands to make sure that you are in fact in that shell.

Cool, huh? This is perfect for debugging a container that absolutely should be working properly. It's also great for my most common "I don't want to install this to my computer" use case.

Treating your Docker image like a regular shell will come in handy when trying to debug Docker builds. Let's say you have a Dockerfile for an image you are trying to build. Normally, when you run `docker build -t my-image .` (`-t` is for tag), Docker will run through each of your RUN steps and stop when it gets to a command that does not exit properly. In a UNIX shell, the exit code 0 means that all is well with a command; any other exit code signals an error.

So to illustrate this point I have made our Dockerfile have a RUN command that exits with 1. Running `docker build -t my-image .` will get you an output that looks like:

- `Sending build context to Docker daemon 2.048kB`
- `Step 1/4 : FROM continuumio/miniconda3:latest`
- `Step 2/4 : RUN apt-get update -y; apt-get upgrade -y; apt-get install -y vim-tiny vim-athena build-essential`
- `Step 3/4 : RUN conda update conda && conda clean --all --yes`
- `The command '/bin/sh -c exit 1' returned a non-zero code: 1`

You can confirm that your Docker image wasn't built by running `docker images` and checking for my-image. It won't be there because it wasn't successfully built.

Now what we can do is comment out that troublesome Dockerfile RUN command. Then what you will see is:

- `Sending build context to Docker daemon 2.048kB`
- `Step 1/3 : FROM continuumio/miniconda3:latest`
- `Step 2/3 : RUN apt-get update -y; apt-get upgrade -y; apt-get install -y vim-tiny vim-athena build-essential`
- `Step 3/3 : RUN conda update conda && conda clean --all --yes`

You can now drop into your Docker image and start interactively running commands! Run `docker run -it my-image bash`. From here, one by one, you can start debugging your RUN commands to see what went wrong. If you're not sure whether a command exited properly, first run `docker run -it my-image bash` to get to the shell, run the command, and then check its exit code with `echo $?`.

You can keep running these steps, commenting out your Dockerfile, dropping into a shell, and figuring out problematic commands, until your Docker image builds perfectly.
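The exit-code check this walkthrough relies on (0 means success, anything else makes `docker build` stop) can be tried in any POSIX shell, inside or outside a container. What follows is only a minimal sketch: the variable names `ok_status` and `fail_status` are made up for illustration, and `sh -c 'exit 0'` and `sh -c 'exit 1'` stand in for whatever RUN command you are actually debugging.

```shell
# Stand-in for a RUN command that succeeds.
ok_status=0
sh -c 'exit 0' || ok_status=$?

# Stand-in for a RUN command that fails, like the `exit 1` step in the post.
# `$?` holds the exit code of the command that just ran, so capture it
# right away; the next command would overwrite it.
fail_status=0
sh -c 'exit 1' || fail_status=$?

echo "first command exited with: $ok_status"
echo "second command exited with: $fail_status"

# Same rule docker build applies to each RUN step: non-zero means failure.
if [ "$fail_status" -ne 0 ]; then
    echo "non-zero exit code: a RUN step like this would stop the build"
fi
```

When you are poking around interactively, the shorter form is to run the command and then type `echo $?` on the next line.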
